Deepfake Technology: The Dangers & Benefits of AI-Generated Media

AI is transforming sectors such as healthcare, finance, entertainment, and cybersecurity. One of its most exciting and controversial developments is deepfake technology. The word “deepfake” combines “deep learning” and “fake”, and refers to videos, images, and audio that AI has generated or manipulated so that they look realistic but are false. First developed for research and entertainment, deepfake tech has become widespread, prompting ethical discussions and security concerns.

Deepfake technology is a double-edged sword. It opens up exciting prospects in filmmaking and personalised content, but it also carries significant risks, such as misinformation, identity theft, and the erosion of trust in digital identity. As deepfake videos become harder to detect, society must understand both the positive and the negative impact of this emerging AI tool.

This blog covers the main benefits and risks of AI-generated media, the implications for digital forensics, and tips for navigating this new landscape of deepfakes responsibly.

The Benefits of Deepfake Technology

The Creative Potential of AI-Generated Content

Deepfake technology is transforming entertainment, media, and content creation. With AI-driven video and audio synthesis, filmmakers and creators can enhance storytelling in new ways:

- Film & Television: Hollywood uses AI-generated media for digital de-aging, posthumous performances, and scene manipulation. For instance, deepfake tech recreated a younger Luke Skywalker in The Mandalorian, giving audiences a seamless experience.

- Historical Resurrections: AI-generated content can revive historical figures, making history more engaging in documentaries and museums. Imagine watching a realistic video of Abraham Lincoln delivering the Gettysburg Address.

- Advertising & Marketing: Brands can use deepfakes to personalise ads, creating AI-generated influencers tailored to specific audiences.

With deepfake tools now more accessible, independent creators and small-budget productions can achieve high-quality results once reserved for major studios. This democratisation fosters inclusivity and diverse storytelling in media.

Enhancing Accessibility and Personalization

Another notable advantage of deepfake technology is its ability to improve accessibility and inclusivity:

- Sign Language Interpretation: AI-generated sign language avatars can translate spoken dialogue into sign language in real time.

- Audio Descriptions for the Visually Impaired: Deepfake-generated voiceovers can turn visuals into spoken narratives, enhancing accessibility in film, education, and news.

- Multilingual Dubbing & Real-Time Translation: Deepfake voice technology allows for seamless dubbing of performances in multiple languages, preserving natural expressions and intonation. This improves global access to content.

- Education & Training: AI-generated tutors and virtual assistants can personalise learning experiences to meet individual student needs.

Integrating AI-generated media into accessibility solutions can break communication barriers and enhance inclusivity in the digital space.

The Dangers of Deepfake Technology

The Threat of Misinformation & Fake News

While deepfake technology can entertain and educate, it poses a serious risk of misinformation and deception. The ability to create convincing fake videos and audio raises ethical concerns about digital trust and information integrity:

- Political Manipulation: A fake video of a political leader could influence elections, incite unrest, or damage reputations.

- Corporate Fraud: Deepfake audio mimicking a CEO’s voice could authorise fraudulent transactions, threatening businesses.

- Fake News & Public Confusion: Deepfake videos can spread misinformation rapidly, making it harder for the public to distinguish reality from fabrication.

A 2018 MIT study of Twitter found that false news stories reached people about six times faster than true ones, which amplifies the risks posed by deepfake-generated misinformation.

Challenges for Digital Forensics and Security

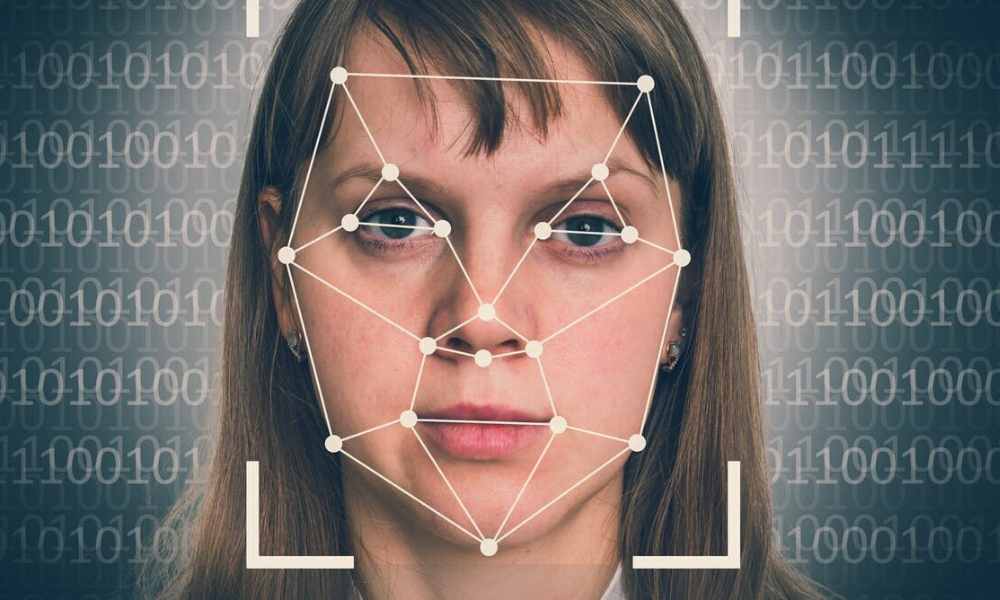

As deepfakes grow more sophisticated, digital forensic experts face significant challenges in detecting manipulated media. Current detection methods include:

- AI-Based Deepfake Detection Tools: Companies like Microsoft and Facebook are developing automated tools that analyse pixel inconsistencies and unnatural facial movements.

- Blockchain for Media Verification: Some organisations are testing blockchain technology to create verifiable records of authentic media.

- Watermarking & Metadata Tracking: Invisible digital watermarks embedded in genuine content could serve as an authentication mechanism against deepfake tampering.

Despite these efforts, the arms race between deepfake creators and digital forensic experts continues, requiring ongoing innovation to combat AI-generated deception.
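The idea behind a verifiable record of authentic media can be illustrated with a toy example. The sketch below is not a real blockchain or any organisation's actual system: it is a minimal hash-chained ledger, assuming the publisher registers the exact bytes of each genuine file. The names `fingerprint` and `MediaLedger` are hypothetical and exist only for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(media_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw media bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


def _hash_record(record: dict) -> str:
    """Hash a record deterministically by serialising it with sorted keys."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


class MediaLedger:
    """Toy append-only ledger: each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def register(self, media_bytes: bytes, publisher: str) -> dict:
        """Append a record for a genuine media file and return it."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        record = {
            "media_hash": fingerprint(media_bytes),
            "publisher": publisher,
            "prev_hash": prev_hash,
        }
        record["entry_hash"] = _hash_record(record)
        self.entries.append(record)
        return record

    def is_registered(self, media_bytes: bytes) -> bool:
        """Check whether these exact bytes were registered as authentic."""
        digest = fingerprint(media_bytes)
        return any(entry["media_hash"] == digest for entry in self.entries)

    def chain_is_intact(self) -> bool:
        """Recompute every entry hash to detect edits to past records."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: entry[k] for k in ("media_hash", "publisher", "prev_hash")}
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _hash_record(body):
                return False
            prev_hash = entry["entry_hash"]
        return True
```

A publisher would call `register` when releasing a clip, and a viewer could later call `is_registered` on a downloaded copy. Note that an exact hash only matches byte-identical files, so a legitimately re-encoded or resized copy would fail this check; that limitation is one reason watermarking and metadata approaches are explored alongside ledgers.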

How to Navigate the Deepfake Landscape

Recognising Deepfakes: Signs & Detection Methods

While deepfake videos are becoming more advanced, some signs can help identify them:

- Unnatural Facial Movements – Lips may not sync with speech, or blinking may seem odd.

- Inconsistencies in Lighting & Shadows – AI-generated faces often struggle to reproduce realistic lighting and shadows.

- Blurred or Glitchy Edges – Look for inconsistencies around the face during quick movements.

- Mismatched Audio & Speech Patterns – If the voice sounds robotic or mismatched with the mouth, it may be AI-generated.
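To make one of these signs concrete, the blinking cue can be turned into a simple check. The sketch below is only an illustration, not how any named detection tool works. It assumes some earlier face-tracking step (not shown) has already produced one "eye openness" number per video frame, where low values mean the eyes are closed. The function names, the 0.2 closed-eye threshold, and the 5 to 40 blinks-per-minute range are all illustrative assumptions rather than established values.

```python
def count_blinks(eye_openness, closed_threshold=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    eyes_closed = False
    for value in eye_openness:
        if value < closed_threshold and not eyes_closed:
            # Eyes have just closed: this starts a new blink.
            blinks += 1
            eyes_closed = True
        elif value >= closed_threshold:
            eyes_closed = False
    return blinks


def blink_rate_per_minute(eye_openness, fps, closed_threshold=0.2):
    """Convert the blink count into blinks per minute of footage."""
    duration_minutes = len(eye_openness) / fps / 60
    if duration_minutes == 0:
        return 0.0
    return count_blinks(eye_openness, closed_threshold) / duration_minutes


def blinking_looks_odd(eye_openness, fps, low=5.0, high=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    rate = blink_rate_per_minute(eye_openness, fps)
    return rate < low or rate > high
```

A flag from a check like this is only a hint that a clip deserves a closer look. Real people blink at very different rates, and newer deepfakes can reproduce natural blinking, so it should never be treated as proof either way.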

Mitigating the Spread of Misinformation

To combat deepfake misinformation, individuals and organisations should take proactive steps:

- Verify Sources: Always cross-check videos or statements with credible news outlets.

- Support Digital Literacy Programs: Promote education on media literacy so people can critically assess online content.

- Fact-Checking & Reporting Tools: Use tools like Deepware Scanner and Sensity AI to identify manipulated content.

- Encourage Regulatory Measures: Advocate for legal frameworks that hold creators of harmful deepfakes accountable.

Expert Tips & Common Mistakes to Avoid

Best Practices for Ethical Use of AI-Generated Media

- Transparency is Key – Clearly label AI-generated content.

- Respect Privacy – Get consent before using someone’s likeness.

- Use AI for Positive Impact – Focus on ethical applications in education, accessibility, and entertainment.

Common Mistakes & Misconceptions

- Assuming All Deepfakes are Malicious – While some are deceptive, many serve legitimate purposes in entertainment and accessibility.

- Overlooking Education – Raising awareness about deepfakes is key to preventing misinformation.

The Future of Deepfake Technology

Deepfake technology is both an innovative breakthrough and a digital security threat. As AI-generated media develops, it calls for a careful balance between creativity and accountability. Deepfakes improve storytelling and accessibility but also endanger information integrity and security.

To help secure a responsible future for media in the era of AI generation, we must:

- Raise awareness about deepfake detection

- Promote transparency in AI-generated media

- Encourage regulations to prevent potential abuse

- Use AI to support content creation and accessibility

As deepfake technology becomes more common, how will you approach AI-generated media responsibly? Join the conversation and share your thoughts on the future of deepfakes.